Original Source: https://towardsai.net/p/machine-learning/15-leading-cloud-providers-for-gpu-powered-llm-fine-tuning-and-training

Demand for building products with large language models (LLMs) has surged since the launch of ChatGPT. This has driven massive growth in the compute needed to train models and run them (inference). Nvidia GPUs dominate the market, particularly the A100 and H100 chips, but AMD has also expanded its GPU offering, and companies like Google have built custom AI chips in-house (TPUs). Nvidia’s data center revenue (predominantly from sales of GPUs for LLM use cases) grew 279% year over year in Q3 2023, to $14.5 billion!
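
A percentage growth figure is easy to misread: growing 279% means the new value is 3.79 times the old one, not 2.79 times. The short Python sketch below backs out the prior-year revenue implied by the two numbers quoted above (about $3.8 billion). It is simple arithmetic on those figures, not a separately reported number.

```python
def implied_prior_value(current: float, growth_pct: float) -> float:
    """Back out the prior-period value from a current value and a growth rate.

    A growth of g% means: current = prior * (1 + g / 100).
    """
    return current / (1 + growth_pct / 100)


# Figures quoted in the text: $14.5 billion after 279% year-over-year growth.
current_revenue_bn = 14.5
growth_pct = 279

prior_revenue_bn = implied_prior_value(current_revenue_bn, growth_pct)
multiple = current_revenue_bn / prior_revenue_bn

print(f"Implied prior-year revenue: ${prior_revenue_bn:.2f} billion")
print(f"Revenue multiple: {multiple:.2f}x")
```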

Most AI chips have been bought by leading AI labs for training and running their own models, such as Microsoft (for OpenAI models), Google, and Meta. However, many GPUs have also been bought and made available to rent on cloud services.

The need to train your own LLM from scratch on your own data is rare. More often, it makes sense to fine-tune an open-source LLM and deploy it on your own infrastructure. This can offer more flexibility and lower costs than LLM APIs (even those that offer fine-tuning services).
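
Whether self-hosting actually saves money depends on volume. The Python sketch below computes the monthly token volume at which renting a GPU around the clock costs the same as a pay-per-token API. All prices are hypothetical placeholders, not quotes from any provider, and the model assumes the rented GPUs can serve that volume while ignoring storage, networking, and engineering time.

```python
# Hypothetical break-even sketch: all prices are placeholders, not provider quotes.

HOURS_PER_MONTH = 730  # average hours in a month (8,760 hours / 12)


def api_cost(tokens_per_month: float, usd_per_million_tokens: float) -> float:
    """Monthly cost of a pay-per-token LLM API."""
    return tokens_per_month / 1_000_000 * usd_per_million_tokens


def self_hosted_cost(gpu_hourly_usd: float, num_gpus: int,
                     hours_per_month: float = HOURS_PER_MONTH) -> float:
    """Monthly cost of renting GPUs around the clock.

    Assumes the GPUs can serve the required token volume; ignores storage,
    networking, and engineering time.
    """
    return gpu_hourly_usd * num_gpus * hours_per_month


def break_even_tokens(gpu_hourly_usd: float, num_gpus: int,
                      usd_per_million_tokens: float,
                      hours_per_month: float = HOURS_PER_MONTH) -> float:
    """Monthly token volume at which the API bill equals the GPU rental bill."""
    monthly_gpu_bill = self_hosted_cost(gpu_hourly_usd, num_gpus, hours_per_month)
    return monthly_gpu_bill / usd_per_million_tokens * 1_000_000


# Hypothetical inputs: one GPU at $2.00/hour vs. an API at $10 per million tokens.
tokens = break_even_tokens(gpu_hourly_usd=2.0, num_gpus=1, usd_per_million_tokens=10.0)
print(f"Break-even volume: {tokens:,.0f} tokens/month")
```

Above the break-even volume, the fixed GPU bill is cheaper than paying per token; below it, the API wins. Plugging in real prices from a provider’s pricing page gives a first-pass answer for a specific workload.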

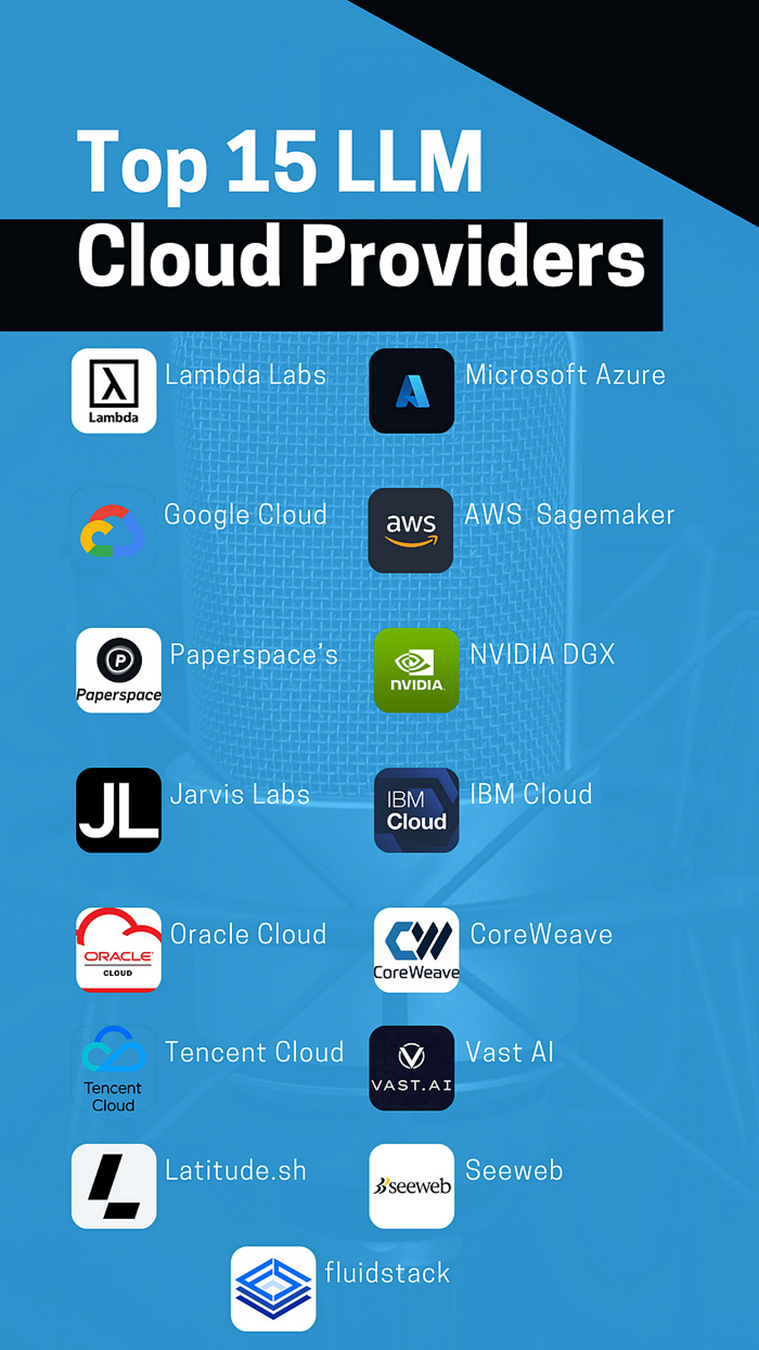

This guide will provide an overview of the top 15 cloud platforms that facilitate access to GPUs for AI training, fine-tuning, and inference of large language models.

1. Lambda Labs

Lambda Labs is among the first cloud service providers to offer NVIDIA H100 Tensor Core GPUs, known for their strong performance and energy efficiency, in a public cloud on an on-demand basis. Lambda’s collaboration with Voltron Data also aims to make AI computing accessible, with a focus on availability and competitive pricing.

Lambda’s other offerings include:

• NVIDIA GH200 Grace Hopper™ Superchip-powered clusters with 576 GB of coherent memory.

• Weights & Biases integration to accelerate model development for teams.

Lambda’s cloud services simplify complex AI tasks such as training sophisticated models and processing large datasets. The platform is designed to make LLM training more efficient for large-scale projects that need substantial memory.
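
Why memory matters so much for LLM training can be seen with back-of-the-envelope arithmetic. The Python sketch below estimates memory for model weights and for full fine-tuning, assuming the common rule of thumb of about 16 bytes per parameter for Adam with mixed precision (activations, KV caches, and framework overhead are ignored). The 576 GB comparison uses the figure quoted above only as a rough yardstick; it does not model how that memory is split between GPU and CPU.

```python
# Rough memory estimates for LLM weights and full fine-tuning.
# Per-parameter byte counts are standard dtype sizes; the 16-bytes-per-parameter
# training figure is a common rule of thumb, not a vendor specification.

BYTES_PER_PARAM = {"fp32": 4, "bf16": 2, "fp16": 2, "int8": 1}
ADAM_MIXED_PRECISION_BYTES = 16  # 2 (weights) + 2 (grads) + 12 (fp32 master copy, Adam m and v)
MEMORY_BUDGET_GB = 576  # coherent-memory figure quoted for Lambda's GH200 clusters


def weights_gb(num_params: float, dtype: str = "bf16") -> float:
    """GB (1e9 bytes) needed just to hold the weights, e.g. for inference."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


def full_finetune_gb(num_params: float) -> float:
    """Approximate GB for full fine-tuning with Adam in mixed precision, excluding activations."""
    return num_params * ADAM_MIXED_PRECISION_BYTES / 1e9


for billions in (7, 13, 70):
    n = billions * 1e9
    train = full_finetune_gb(n)
    print(f"{billions}B params: weights {weights_gb(n):.0f} GB, "
          f"full fine-tune ~{train:.0f} GB, "
          f"within {MEMORY_BUDGET_GB} GB: {train <= MEMORY_BUDGET_GB}")
```

For a 70B-parameter model, this gives about 140 GB for bf16 weights but roughly 1,120 GB for full fine-tuning, which is why large-memory nodes, multi-GPU clusters, or parameter-efficient methods come into play at that scale.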

2. Microsoft Azure

Microsoft Azure provides a suite of tools, resources, guides, and a selection of products, including Windows and Linux virtual machines.

Azure enables:

• Easy setup and content safety.

• A remote desktop experience, accessible from any location for convenience and security.

• Speech and natural language processing capabilities for applications such as speech-to-text, text-to-speech, and speech translation, as well as natural language understanding and machine translation.

Microsoft Azure employs AI to analyze visual content and accelerate the process of extracting information from documents. The platform also uses AI to ensure content safety, simplifying operations and management from cloud to edge.

Fig: Azure GPU Pricing

3. Google Cloud

Google Cloud’s AI solutions offer a range of AI-powered tools for streamlining complex tasks and improving efficiency. The platform also offers customization options, aiming to make advanced AI capabilities accessible to businesses of all sizes. Additionally, Vertex AI streamlines the training of high-quality, custom machine learning models with relatively little effort or technical expertise.

Google Cloud’s other features include:

• Pre-configured AI tools for tasks such as document summarization and image processing.

• A platform to experiment with sample prompts and create customized prompts.

• Tools to adapt foundation models and large language models (LLMs) to specific needs.

• Over 80 models in Vertex AI Model Garden, including PaLM 2 and open-source models such as Stable Diffusion, BERT, and T5.